Set-Associative Cache in C#, Part 1: Analysis & Initial Design

A couple of weeks ago, I had never heard of a set-associative cache. Then, I was assigned an interview exercise on HackerRank entitled “Set-Associative Cache Optimization”. (I won’t give away the company or any details about the exercise, since that wouldn’t be fair.) Since I hadn’t heard of such a cache, I decided to learn about it and implement one in C# before I started the assignment, just for practice.

The first thing I learned is that a set-associative cache is more of a hardware concept than a software one, but that they do exist in software. The second thing I learned is that they’re a topic of a fair amount of academic study — I slogged through one paper and queued up a few more in some browser tabs before, ultimately, I decided to implement something fairly straightforward and make it extensible. I find that I learn better by doing than by reading.

Once the project was on my GitHub repository, I decided to write this series of articles, where I walk through the code, describe how the cache works, explain why I made the design decisions I made, and generally show the process of writing a professional-grade data structure for .NET in C#.

In part 1, we’ll try to understand how set-associative caches work and come up with a basic design. In part 2, we’ll expand on the design a bit more and define a code interface for the cache. In part 3, we’ll turn the design into working code.

A Few Words About the Design Process

While these articles will appear to present a somewhat linear process of design and implementation, the actual process was a more iterative and exploratory. I followed the basic steps in this series of articles, but I didn’t do so rigidly. My general approach to designing and developing a new bit of software is the following:

-

Analysis: Understand the problem

- Sketch it out on the whiteboard

- Define the data involved in the problem

- Discover the algorithms and step through them manually

- Consider how the software will be used in production

-

Design: Propose a solution

- More of the steps above, but at a lower level this time

- Consider platforms, languages, libraries

- Examine and compare alternative designs

- Consider usability, performance, testability, and all such requirements

- Look for existing designs and implementations so that I don’t re-invent the wheel

- Talk about and write about the problem and the proposed solution

-

Implementation: Bring the design into reality to solve the problem

- If I get stuck, reconsider the design or the analysis and see if I missed something

“OMG! That’s… that’s… WATERFALL!” No, no, it’s careful consideration of the problem before committing to an implementation. These aren’t rigid phases of any development process handed down from on high. It’s just a reasonable way to approach software development. I may even write exploratory, proof-of-concept code during analysis, and some of that code (maybe all, if I’m lucky) may find its way into the final implementation. I may get all the way into implementation and discover that I need to redo the design. In my experience, the more carefully I understand the problem, and the more diligently I design the solution, the smoother the implementation will be.

Even while writing these articles, I would update the implementation as better ideas occurred to me, and I fixed a few design and implementation bugs along the way as well. If you’re new to software development, don’t worry if you find yourself in a similar kind of loop. A little patience up front, and a willingness to admit later that you didn’t understand something as well as you thought you did, will pay dividends over the course of your career.

How Does a Set-Associative Cache Work?

I’m not going to dive too deeply into caching theory here. As I said earlier, it’s a topic of study for computer scientists. I’ll just present an overview of set-associative caches, and I’ll give you a few links along the way where you can dig deeper.

A set-associative cache is a cache that is split into sets of cache entries, or cache lines. This is a compromise between two other extremes: a direct-mapped cache and a fully associative cache. Each set may have one or more “ways”, where each “way” is single cache entry.

In order to make it easier to visualize how such a cache works, I’m going to use tables that represent a cache with 3 sets and 3 ways per set. Such a cache would be laid out as follows:

| Set 0 | Set 1 | Set 2 | ||||||

|---|---|---|---|---|---|---|---|---|

| Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 |

| Data | Data | Data | Data | Data | Data | Data | Data | Data |

When a new cache item is added to the cache, it is assigned to a set, either via an intrinsic tag (like an address range) or some other algorithm, such as a hash function. I won’t get into all the ways that this can happen; instead, I’ll focus on this C# implementation, which uses a hash function on the key in order to choose which set the key/value pair is stored in.

Let’s say, for example, that we want to cache pairs of celebrity couples. Why you would want to do this in real life, I have no idea, but they’re a much more compact form of data to draw on whiteboards or type into WordPress than HTTP responses or database results. When we add an item to the cache with the key “Brad” and the value “Angelina”, the key is hashed using a Fowler-Noll-Vo 1a hash function (FNV-1a). This returns the 64-bit value 7469233897810807560. This huge number isn’t terribly helpful on its own, so we take the number modulo the number of sets (in this example, 3) to determine which set, from 0 to 2, that the item should be placed into. This gives us the number 0, so this key/value pair will go into set 0. There are three ways in each set, but all are empty at the start, so Brad and Angelina will go into the first way (way 0) in the first set (set 0).

| Set 0 | Set 1 | Set 2 | ||||||

|---|---|---|---|---|---|---|---|---|

| Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 |

| Brad, Angelina | ||||||||

Let’s add another pair: “Kanye” and “Kim”. “Kanye” hashes to 12815697388183119611 which, modulo 3, yields 2, so Kanye and Kim go into the first empty way in set 2:

| Set 0 | Set 1 | Set 2 | ||||||

|---|---|---|---|---|---|---|---|---|

| Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 |

| Brad, Angelina | Kanye, Kim | |||||||

We can keep adding couples until, eventually, we encounter a situation where all three ways in a set are full. If we have added Brad & Angelina, Kanye & Kim, Ben & Jennifer, and Burt & Loni, the cache will look like the following:

| Set 0 | Set 1 | Set 2 | ||||||

|---|---|---|---|---|---|---|---|---|

| Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 |

| Brad, Angelina | Ben, Jennifer | Burt, Loni | Kanye, Kim | |||||

What happens if we try to add Kurt & Goldie? Since the hash for “Kurt” modulo 3 is 0, that couple should go into set 0, but that set is already full. What do we do?

This is where the cache replacement policy comes into effect. The cache needs a policy for how to remove existing cache items to make room for new ones. There are several policies to choose from, such as least-recently used (LRU), least-frequently used (LFU), most-recently used (MRU), and many others. If we use a least-recently used, or LRU, policy, then we should remove the item that hasn’t been stored or accessed as recently as any other item in the set. In our example, that will be Brad & Angelina, so they are evicted to make way for Kurt & Goldie:

| Set 0 | Set 1 | Set 2 | ||||||

|---|---|---|---|---|---|---|---|---|

| Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 |

| Kurt, Goldie | Ben, Jennifer | Burt, Loni | Kanye, Kim | |||||

We can carry on adding couples to the cache and evicting other couples as necessary until the cache is finally full.

| Set 0 | Set 1 | Set 2 | ||||||

|---|---|---|---|---|---|---|---|---|

| Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 | Way 0 | Way 1 | Way 2 |

| Kurt, Goldie | Ben, Jennifer | Burt, Loni | Desi, Lucy | John, Yoko | Tom, Rita | Kanye, Kim | Sonny, Cher | Johnny, June |

If we add a new couple now, then no matter what set the couple ends up in, they will evict the least-recently used couple in that set.

Design

If you’re familiar with the design and implementation of hash tables, you may see some resemblance between the description above and the way that hash tables work. (If you’d like a good introduction to hash table implementation, read Ben Hoyt’s excellent article on implementing a hash table in C.) The key difference here is that in a hash table, the table expands to accommodate new key/value pairs, whereas in a cache there is a fixed capacity for elements and a policy for removing elements when the cache is full.

From the user’s point of view, I decided to make using the cache feel like using hash table (or, more generally, a dictionary). Inserting an element into the cache might be done using the same kind of indexing syntax used in a .NET dictionary, and retrieval of an element might be done with a TryGetValue method.

coupleCache["Brad"] = "Angelina";

string value;

bool hasBrad = coupleCache.TryGetValue("Brad", out value);These are probably the two most common operations on a cache, and they need to be really, really fast. Let’s look at what’s involved in making these operations work.

First of all, consider testing for the presence of a key in the cache. We need to be able to quickly hash the key, find the set the key is in, then search the set for the key. This needs to be done when adding an item to the cache, and it also needs to be done when retrieving a value from the cache. Did I mention that this needs to be really, really fast? If it isn’t significantly faster to find and retrieve an item from the cache than from the original data source, then the cache is pointless.

Let’s consider some possible data structures and algorithms. A naïve implementation might use a linked list of key/value pairs to represent a single set, where the capacity of the linked list would be the number of ways in the set. Here are some pros:

- We can use the existing LinkedList<T> class to store our key/value pairs (using the KeyValuePair<TKey, TValue> class).

- Adding, removing, and inserting items is fairly simple.

- We can easily reorder the linked list if we need to (for example, to maintain removal policy).

There are cons, however:

- We would be using a general purpose class, LinkedList, for a very special purpose.

- Adding, removing, and inserting items is simple, and it’s generally faster in the worst case, but in the normal case it might not be as fast as we need it to be.

- Cache data would be co-located with housekeeping data, which might limit our implementation and performance options.

- Speaking of caches, linked lists are not very friendly to CPU caches.

Let’s look again at the constraints of the cache specification.

- We need to create caches with a fixed numbers of sets, where each set has a fixed number of ways.

- We need to allow clients to tune the numbers of sets and ways to obtain the best performance for their specific scenarios.

- We need to store any type of key and any type of value.

- We need to be FAST!

Let’s consider how users might want to create a cache in the first place. Much like the generic Dictionary type, users may want to provide the specific key and value types, so we’ll design for a generic interface. For our earlier example, which is a cache with string keys, string values, 3 sets of 3 items per set, and a least-recently used replacement policy, the code defining the cache instance might look like the following:

LruCache<string, string> coupleCache = new (sets: 3, ways: 3);If we presume this usage pattern, then we can see that, at compile time, we know the both the total capacity of the cache and the replacement policy for evicting cache items. A fixed capacity is a hint than instead of linked lists, maybe we should consider arrays.

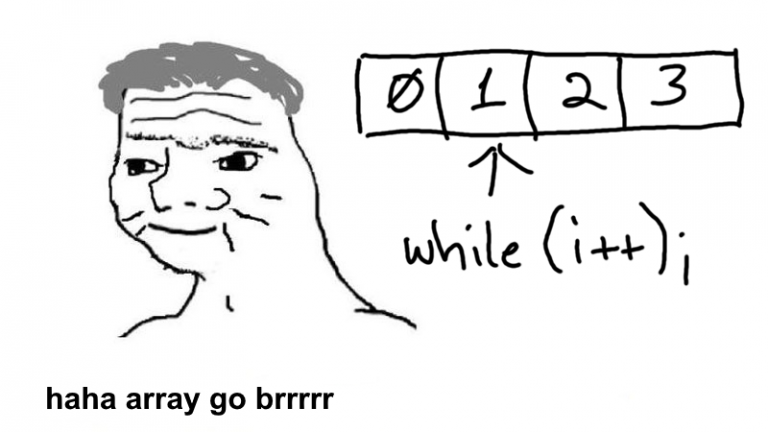

Many of you may already be saying, “NO! Inserting and rearranging items in arrays is SLOW!” Some of you, however, may be nodding and saying, “ Haha array go brrrrr.”

Consider what we need to store, and what state we need to maintain, in order to make the cache work:

- Store the key/value pairs for the cache items.

- Track what items are in what sets.

- Track metadata in each set for maintaining the cache eviction policy.

- Track whether a given set is full.

We know that finding and retrieving a key/value pair has to be as fast as possible. Let’s step through the process again:

- Hash the key

- Find the set that stores the key

- Find which way in the set contains the key

- Use the key to get the value

We’ll consider step 1 to be a fixed cost, although it’s something we can possibly optimize later. Step two is just a modulo operation. Step three implies that we have to search the set for the key, and this is where the proverbial rubber meets the road. One of the advantages of using a set-associative cache is that it can minimize the impact of this step. Rather than searching every element in the cache, we only have to search the number of ways in a set, and we don’t want too many ways in a set anyway.

Let’s consider two arrays. The first array stores the actual data:

KeyValuePair<TKey, TValue>[] valueArray;In our example of celebrity couples, we used a 3-set, 3-way cache. That would mean our value array would have nine elements (3 times 3) when the cache is instantiated, and it would stay that size. When a key/value pair is placed into a location in the array, we want it to stay there until it needs to be removed to make room for another item. We definitely don’t want to do any sorting or insertion on this array, since there is a potential for storing arbitrarily large value types in the array. That means we need to manage the bookkeeping in a separate data structure. Since we already have the keys in this array, we only need to keep track of the indices into this array so that we can quickly find them when needed. Let’s make another array of integers.

int[] keyArray;This array stores the indices to items in valueArray. It is partitioned by set. It doesn’t matter where the actual key/value pairs are stored in the value array, as long as we track them here. (To be completely honest, we’re going to change this slightly in the implementation, but for simplicity we’ll stick with this definition for now.)

Let’s walk through our earlier examples above using this design.

When we create the key array and size it as sets times ways, we get this by default:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

That isn’t very helpful, since all of the elements point at the first index in the value array. We need a sentinel value to tell us when a location in the key array is available. We’ll fill the empty array with int.MaxValue when the cache is created or cleared, so that the fresh key array looks like the following in memory:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF |

That’s a little bit better. This array will easily fit into the CPU cache, which means we can operate on it very quickly.

Now, when we add Brad and Angelina, we can quickly see that the first element in set 0, which also happens to be key-array index 0, is empty. Next, we just need to find an empty spot in the value array where we can place the key/value pair. Uh-oh… how do we do that? Remember, KeyValuePair<TKey,TValue> is a value type, so a fresh array of KeyValuePair<string,string> would look like the following:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| [null,null] | [null,null] | [null,null] | [null,null] | [null,null] | [null,null] | [null,null] | [null,null] | [null,null] |

That might work if you assume that a null key, or maybe a null value, indicates an empty slot, but what if your key and value types are integers?

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| [0,0] | [0,0] | [0,0] | [0,0] | [0,0] | [0,0] | [0,0] | [0,0] | [0,0] |

It’s entirely possible that zero is a valid key and/or value in such a cache. This definition won’t work. We need to make the array items nullable by adding a ? after the type and before the array brackets so that we can distinguish an empty cache slot from an occupied one.

KeyValuePair<TKey, TValue>?[] valueArray; // The ? makes this nullableThat way, by default, the value array is initialized thus:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| null | null | null | null | null | null | null | null | null |

Following these steps to add Brad & Angelina, we get the following contents for the key array, where element 0 contains the index in the value array where Brad & Angelina are stored (which also happens to be element 0).

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| 0 | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF |

Likewise, for the value array:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| [Brad, Angelina] | null | null | null | null | null | null | null | null |

After we add Kanye & Kim, we get the following contents for the key array, where now element 6 contains the index for the location of Kanye & Kim in the value array (which, again, also happens to be element 6):

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| 0 | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 6 | 0x7FFFFFFF | 0x7FFFFFFF |

Likewise, for the value array:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| [Brad, Angelina] | null | null | null | null | null | [Kanye, Kim] | null | null |

So far it just looks like we have an unnecessary level of indirection to the value array, since the key array elements contain the corresponding indices into the value array, but let’s look at the case where we add another item to a set. Suppose that we want to add Ben & Jennifer. They will end up in set zero next to Brad & Angelina. However, we will need to do some bookkeeping now. If we’re using a least-recently used cache eviction policy, then we need to re-arrange the elements in the key array that correspond to the set so that, if we need to evict a cache item, we can easily find the least-recently used key. Let’s presume that we’ll always put the most-recently used key at the lowest index in the set, and we’ll move the other items to higher indices in the set up to make room for it. That will push the least-recently used key to the highest index in the set.

When we place Ben & Jennifer into set 0 (elements 0, 1 and 2), then in the key array we shift the contents of element 1 into element 2 and element 0 into element 1, and we put the new value index into element 0. That makes Ben & Jennifer the least-recently used cache item in the set. However, when we search for the next available value index in the value array, we find that Ben & Jennifer will be stored at element 1. That means our key array will look like the following:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| 1 | 0 | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 6 | 0x7FFFFFFF | 0x7FFFFFFF |

The value array, however, will look like the following:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| [Brad, Angelina] | [Ben, Jennifer] | null | null | null | null | [Kayne, Kim] | null | null |

When we add Burt & Loni into set 0, we do another shift in the key array so that the value index for Brad & Angelina is stored in the key array’s highest index for set zero, meaning they’ll be up for eviction when another item is added to set zero, unless their key is accessed before then. The key array would look like the following:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| 2 | 1 | 0 | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 6 | 0x7FFFFFFF | 0x7FFFFFFF |

The value array would look like the following:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| [Brad, Angelina] | [Ben, Jennifer] | [Burt, Loni] | null | null | null | [Kayne, Kim] | null | null |

We also need to adjust the indices when cache items are retrieved. With the LRU policy, every time a cache item is accessed, its key should be moved into the lowest index, pushing the rest of the items higher. Consider what would happen if the client code retrieved the item with the key “Brad.” That item’s index (index 0) would be moved into the spot for the most-recently used item:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| 0 | 2 | 1 | 0x7FFFFFFF | 0x7FFFFFFF | 0x7FFFFFFF | 6 | 0x7FFFFFFF | 0x7FFFFFFF |

The actual cache data in the value array would not change, however.

You can now see that the indices in the key array float around independently of the actual values in the value array. Because the key array just contains integers and is quite compact, it’s blazing fast to reorder elements in the array, much faster than with a linked list. I know, I’ve tried it.

Let me add a disclaimer for the Yeahbutters and the Whataboutists. I’m not saying that linked lists are never a good idea, or that they never out-perform arrays. I’m saying that in this particular usage scenario, where arrays can make better use of CPU cache and optimizations and intrinsics, arrays generally perform better. One should, however, always benchmark one’s code and test one’s assumptions with real-world data under real-world conditions.

Now that we have the overall design, in the next article we’ll dig a little deeper into the design and look at how to handle alternative cache-eviction policies. Then, we’ll consider what code interface to provide for users of the cache.